With over 500 transmission substations and 16,400 miles of power lines, the transmission group at Tennessee Valley Authority (TVA) has plenty of assets to manage — and analytics is one of the go-to tools for the job. To deliver quality decision-making support, “It’s all about the data and the process,” says Neelanjan Patri, manager of TVA’s Operational Technology and Analytics group. He adds that people are an integral part of data collection and must be included from the start.

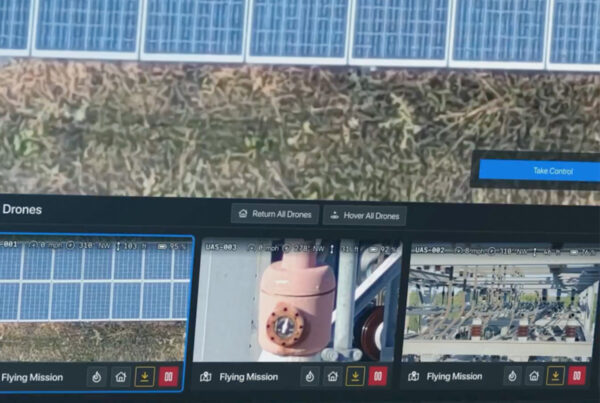

A showcase of how data and analytics are being used in transmission is TVA’s Asset Performance Center (APC) platform. The APC provides information and decision-making support to the operations team in the transmission organization. The APC platform uses a data-fabric architectural approach, something Gartner defines as a design concept that serves as “an integrated layer (fabric) of data and connecting processes.” [1]

Stakeholders and SMEs

Since data can support multiple analytics solutions, it should be stored in a way that provides easy access, Patri says. For some solutions, TVA pulls data from multiple source systems into a common repository, which requires collaboration between those who own and manage the data and those who collect it.

“If I’m just automating data collection for my own work, it’s easy,” Patri notes. “The hard part is automating at the enterprise level because of the large number of users, processes and systems. You have a diverse set of stakeholders, and you need to make sure there’s agreement.”

Patri advocates for getting users and subject-matter experts involved at the inception of any project. “Involving users upfront rather than having them be on the receiving end makes a huge difference,” he says. “When people see their input reflected in a bigger enterprise system, they’re more invested in the solution and likely to promote the system to peers.”

Watching the details

Patri notes that during the data collection process, consistency is critical — regardless of what data collection system is used. For instance, 20 or more data attributes can contribute to transformer health, so they must be factored into predictive models. These attributes include basic data about an asset, such as model number, installation date, maximum load capacity, and other nameplate data likely stored in the work management system. There is also testing and real-time performance data, which may be stored elsewhere.

“The way you identify the asset using a unique identifier in the work management system has to be the same as the way you identify the asset in the testing database,” Patri says. The same holds, he notes, for any system containing data about the asset, including financial systems, which come into play when calculating the risks and costs involved in asset failure.
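The identifier requirement Patri describes can be checked mechanically. The following is a minimal sketch, not TVA's implementation: the system names, the `XFMR-` identifier format, and the function `ids_missing_by_system` are all hypothetical. It reports, for each system, the asset IDs that appear elsewhere but not in that system.

```python
from typing import Dict, Set


def ids_missing_by_system(systems: Dict[str, Set[str]]) -> Dict[str, Set[str]]:
    """Map each system to the asset IDs seen in other systems but absent from it."""
    all_ids: Set[str] = set().union(*systems.values())
    gaps = {name: all_ids - ids for name, ids in systems.items()}
    # Keep only the systems that actually have a gap.
    return {name: missing for name, missing in gaps.items() if missing}


# Hypothetical extracts of asset identifiers from three systems.
# The testing database spells one transformer's ID differently.
asset_ids = {
    "work_management": {"XFMR-0001", "XFMR-0002", "XFMR-0003"},
    "testing_db": {"XFMR-0001", "XFMR-0002", "xfmr-3"},
    "financial": {"XFMR-0001", "XFMR-0002", "XFMR-0003"},
}

mismatches = ids_missing_by_system(asset_ids)
for system, missing in sorted(mismatches.items()):
    print(system, sorted(missing))
```

In this made-up data, the one inconsistent spelling surfaces as a gap in all three systems, which is the kind of mismatch that would keep test results from being joined to the right asset record.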

Another data-quality issue Patri identifies is inconsistent historical data, which is a challenge across industries. “For example, we didn’t have a consistent way of collecting failure data in the past because we didn’t have a common set of failure codes throughout the utility service area,” Patri recalls.
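One common way to work with inconsistent historical records is to map legacy entries onto an agreed code set and flag whatever cannot be mapped for human review. The sketch below illustrates that idea only. The failure codes, the legacy spellings, and the function `normalize_failure_code` are invented for the example and are not TVA's codes or process.

```python
from typing import Optional

# Hypothetical common failure codes agreed on by stakeholders.
COMMON_FAILURE_CODES = {"BUSHING", "COOLING", "WINDING"}

# Hypothetical legacy spellings (stored lowercase) mapped to the common codes.
LEGACY_TO_COMMON = {
    "bush fail": "BUSHING",
    "bsh": "BUSHING",
    "fan out": "COOLING",
    "wdg-flt": "WINDING",
}


def normalize_failure_code(raw: str) -> Optional[str]:
    """Return the common code for a raw entry, or None if it cannot be mapped."""
    cleaned = raw.strip()
    if cleaned.upper() in COMMON_FAILURE_CODES:
        return cleaned.upper()
    return LEGACY_TO_COMMON.get(cleaned.lower())


history = ["BSH", " fan out ", "winding", "unknown noise"]
normalized = [normalize_failure_code(entry) for entry in history]
# Entries that need review by a subject-matter expert.
unmapped = [entry for entry, code in zip(history, normalized) if code is None]
print(normalized)
print(unmapped)
```

Returning `None` rather than guessing keeps ambiguous history visible, so the people who know the assets decide how it should be coded.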

Standardized data capture

TVA standardizes data capture by getting buy-in from those in data-capture roles, such as maintenance and testing crews for asset-health analytics. Rather than letting inputs be determined by tribal knowledge, the utility tries to get users to agree on best practices. Then the analytics team captures the right set of data to support the implementation of those best-practice analytics.

“There is a tendency to come up with a process based on what people are working with,” Patri notes. “First, define what the process should be for the solution you are trying to come up with based on the holistic picture. To do this, we need to get the relevant stakeholders together and make sure there’s agreement.”

He adds that a recommendation from an asset vendor based on its asset and industry best practices helps to gain buy-in. It provides a common understanding among stakeholders of the model being used and the mandatory fields used in the data-collection tools of various systems.
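Mandatory fields of this kind are typically enforced at the point of data capture. As a rough sketch under stated assumptions, the check below lists which required fields are blank in a record. The field names are hypothetical, loosely based on the nameplate attributes mentioned earlier, and `missing_mandatory_fields` is an illustrative helper rather than a feature of any tool named in this article.

```python
from typing import Any, List, Mapping

# Hypothetical mandatory fields for a transformer record.
MANDATORY_FIELDS = ("asset_id", "model_number", "installation_date", "max_load_capacity")


def is_blank(value: Any) -> bool:
    """Treat None and empty or whitespace-only strings as missing."""
    return value is None or (isinstance(value, str) and not value.strip())


def missing_mandatory_fields(record: Mapping[str, Any]) -> List[str]:
    """Return the mandatory fields that are absent or blank, in declared order."""
    return [field for field in MANDATORY_FIELDS if is_blank(record.get(field))]


complete = {
    "asset_id": "XFMR-0001",
    "model_number": "T-500",
    "installation_date": "1998-06-01",
    "max_load_capacity": 500,
}
incomplete = {"asset_id": "XFMR-0002", "model_number": "T-500", "installation_date": "  "}

print(missing_mandatory_fields(complete))
print(missing_mandatory_fields(incomplete))
```

A data-collection form could refuse to save a record until this list is empty, which turns the stakeholders' agreement into a rule rather than a convention.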

TVA’s tools for the job

For data governance, TVA uses Collibra, a tool that helps utilities implement data standards and policies to ensure data consistency and integrity. It also stores metadata that makes data more usable and promotes a single version of the truth. TVA is still integrating the tool into its data collection processes.

TVA’s APC platform is powered by Hitachi Lumada. This application helps to calculate asset health and identify risk exposure, aiding in asset planning. Multiple teams use this information to develop work plans for maintaining or replacing assets and to produce various asset analytics outcomes. TVA staff have also automated the feed to the platform from the utility’s systems of record, including Maximo.

MuleSoft is the enterprise integration tool TVA uses to extract, transform and load (ETL) data from source systems to the centralized platform. It integrates data from systems such as Maximo, Doble and eDNA (TVA’s historian) into Lumada.

In the end, though, it is often the people who work with the assets and the related source systems who determine the data quality or lack thereof. They are the ones who need to record data correctly for all the systems that automatically feed into the analytics data fabric. So, along with the buy-in, Patri makes user education a priority. “It’s always part of the process,” he says.

Betsy Loeff is a Colorado-based freelance writer who specializes in B2B content creation and trade journalism. Her business, Write Results, serves publications and technology companies focused on the utilities sector.

Tennessee Valley Authority (TVA) is a utility member of Utility Analytics Institute.

References:

[1] Gupta, Ashutosh. “Data Fabric Architecture is Key to Modernizing Data Management and Integration.” Gartner, 11 May 2021. https://www.gartner.com/smarterwithgartner/data-fabric-architecture-is-key-to-modernizing-data-management-and-integration. Accessed 10 Oct 2023.
